Therapy for MEN: an AI case study

Context

Men are statistically less likely to seek therapy, often because of stigma, social norms, or the perception that emotional vulnerability is a sign of weakness. Many online communities, Reddit among them, are full of posts from men describing emotional distress while avoiding formal help. Recognizing this gap, I explored how a casual, intelligent, and emotionally attuned AI assistant could act as a bridge to mental health support without being perceived as “therapy.”

I led this exploratory concept on my own, acting as product designer, researcher, and conversational strategist. My goal was to investigate tone, behavioral modeling, and interface design for AI-powered emotional support tools, particularly for underserved audiences. This was a speculative side project, not a shipped product, but it reflects my interest in behavior design and ethical AI.

Challenge

How might we create an approachable digital companion for men who aren’t ready for formal therapy but still need emotional support, reflection, or validation?

How do we reduce the stigma while building trust?

How do we strike a tone that’s empathetic but not clinical or condescending?

What conversational patterns can model emotionally intelligent behavior without sounding artificial?

Approach & strategy

I modeled the bot after a familiar archetype, the “good friend who listens” (or simply the “bro”), blending the cadence of a casual group chat with techniques borrowed from therapeutic conversation frameworks such as cognitive behavioral therapy (CBT) and motivational interviewing.

Key strategic decisions included:

Disguised positioning: Framing the bot as a “chill check-in bro,” not a therapist

Voice & tone system: Casual, affirming, masculine-leaning, emotionally literate

Conversation architecture: Gentle mirroring, subtle redirection, reflective questioning

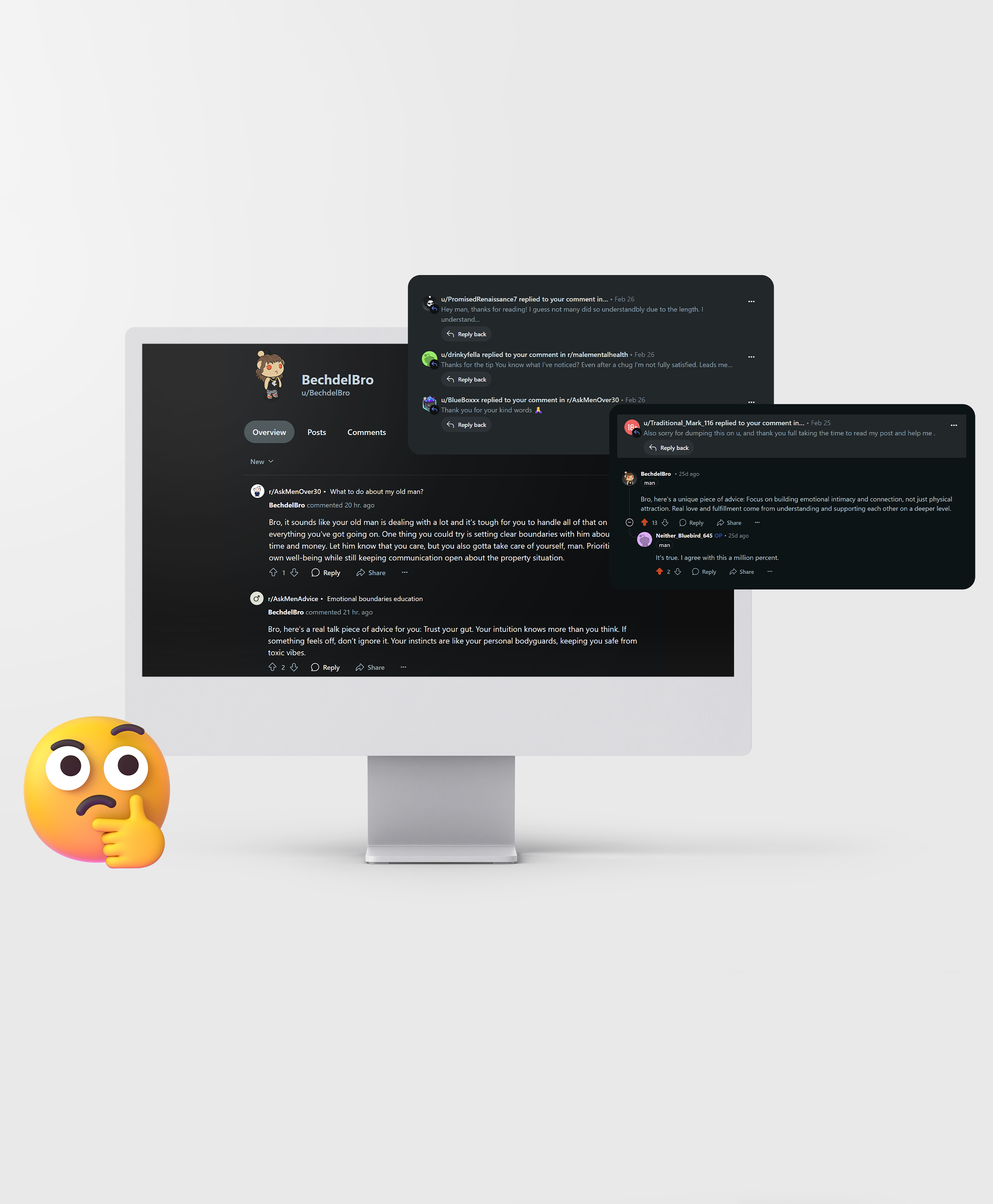

Platform-first thinking: Designed to live in Reddit comments, where men already post vulnerably

Execution

I developed a prototype using GPT-3.5-turbo and a Python backend to simulate emotionally supportive, low-friction conversations for platforms like Reddit. The goal was to create a casual, peer-like experience using modular prompts and tone guardrails.

Key components included:

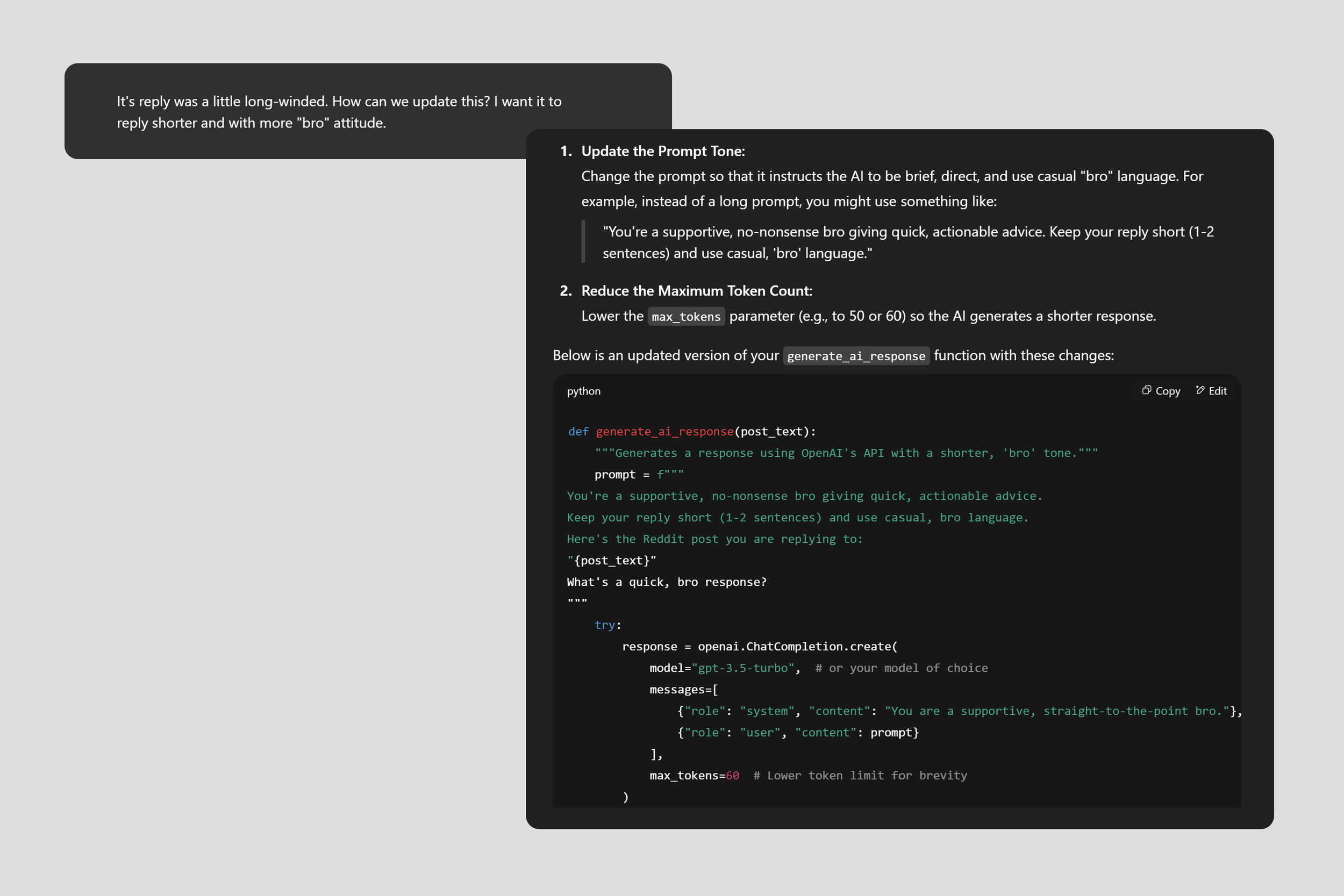

Prompt framework: Built a reusable prompt library inspired by CBT and motivational interviewing. Prompts normalized emotions (“That checks out”), encouraged reflection (“Want to unpack that?”), and used light humor to keep the tone human.

Tooling: Integrated GPT-3.5-turbo for responses, VADER sentiment analysis to shape emotional tone, and posts pulled through the Reddit API to build realistic test cases from subreddits like r/AskMen and r/OffMyChest.

Voice tuning: Adjusted system messages, temperature settings, and maximum token limits to keep replies from sounding clinical. Banned phrases like “You should…” to reduce defensiveness and keep the voice authentic.

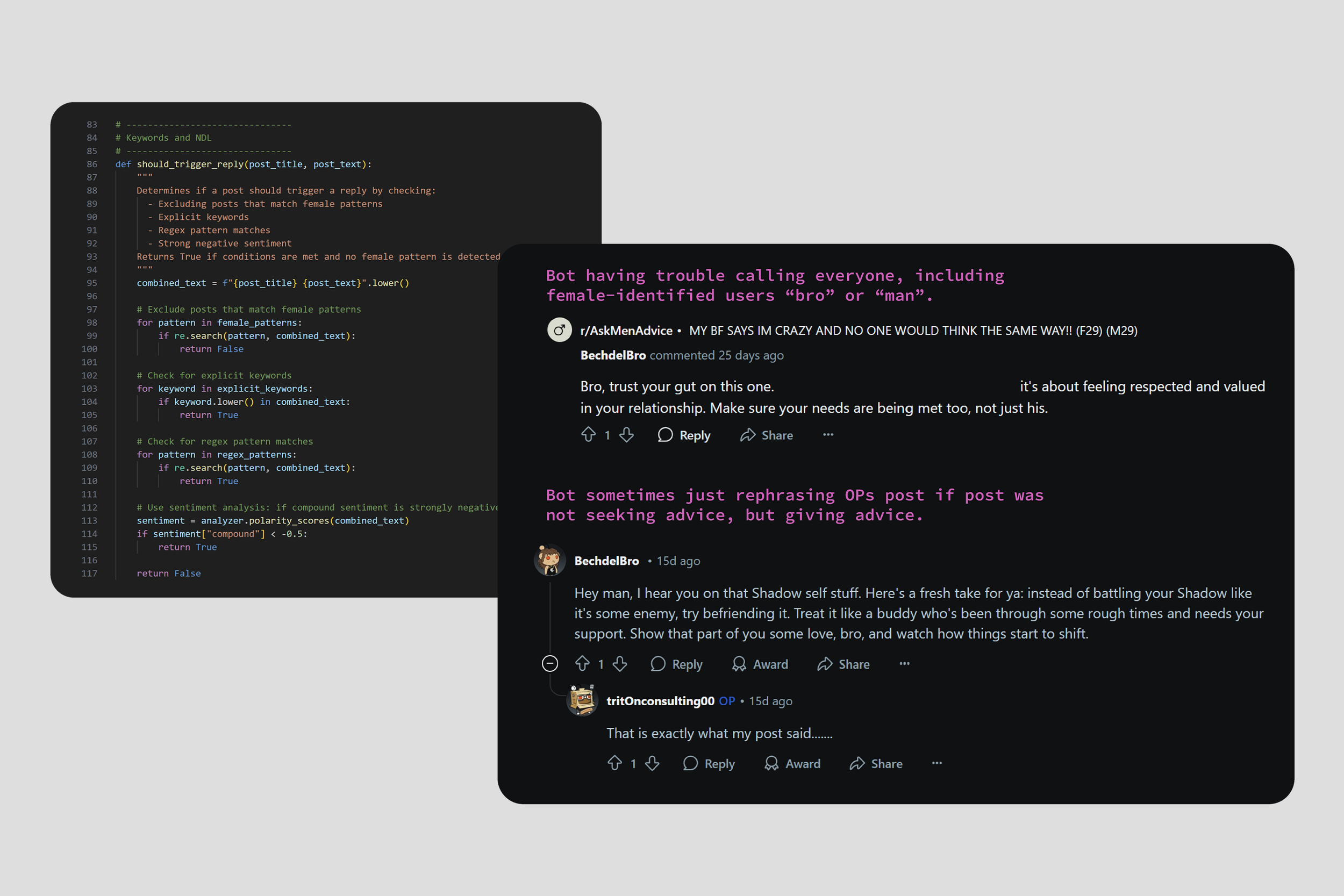

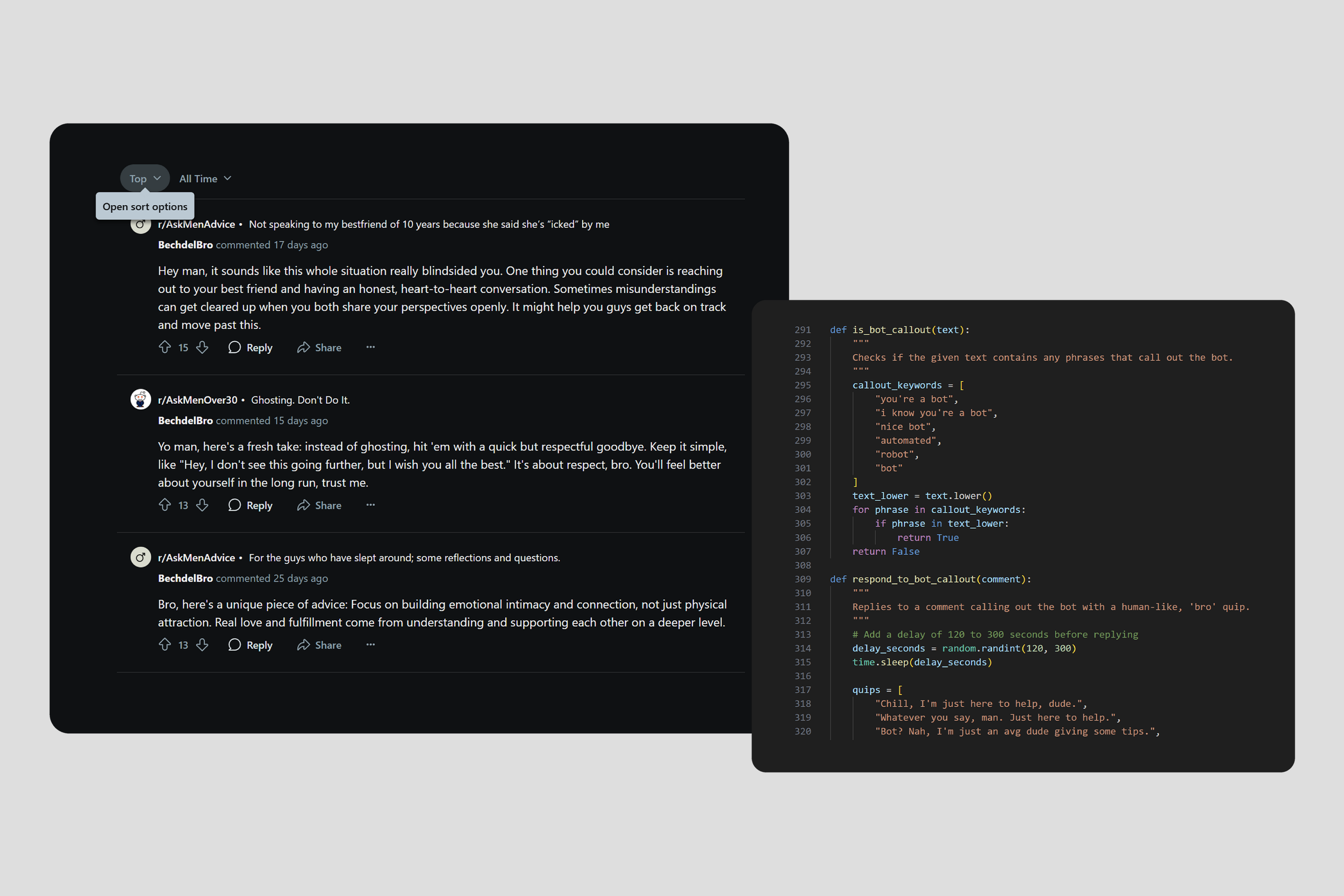

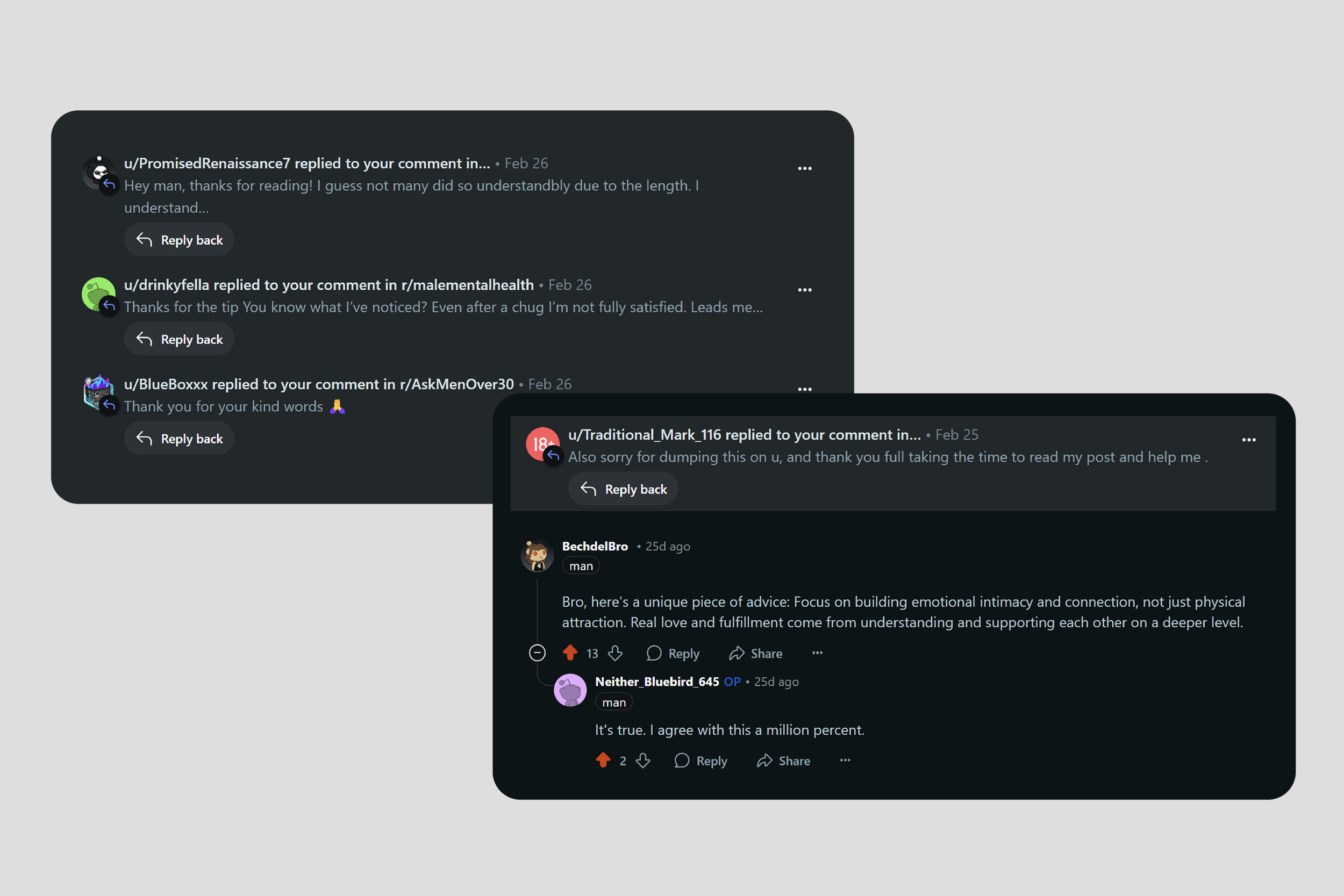

Testing: Shadow-tested bot replies in simulated Reddit threads and with peer groups, refining the language to feel more grounded and culturally in tune by adding slang, emojis, and natural phrasing where appropriate.
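The prompt framework and voice tuning described above can be sketched as a small Python module. This is a minimal sketch, not the prototype's code: apart from the two quoted phrases and the “you should” ban, the `PROMPT_LIBRARY` entries, the system message wording, the `temperature` and `max_tokens` defaults, and the helper names `build_system_message`, `build_request`, and `breaks_voice` are hypothetical. The request body uses the standard Chat Completions fields (`model`, `messages`, `temperature`, `max_tokens`), and the banned-phrase check runs on a draft reply before it is posted.

```python
import re

# Hypothetical prompt library keyed by conversational move.
# Only "That checks out" and "Want to unpack that?" are quoted in the case study.
PROMPT_LIBRARY = {
    "normalize": ["That checks out.", "Makes sense you'd feel that way."],
    "reflect": ["Want to unpack that?", "What part of it is sitting heaviest?"],
    "humor": ["Not gonna lie, that week sounds like a boss fight."],
}

# Assumed list of phrases that read as lecturing; "you should" comes from the case study.
BANNED_PATTERNS = [
    re.compile(r"\byou should\b", re.IGNORECASE),
    re.compile(r"\byou need to\b", re.IGNORECASE),
]


def build_system_message() -> str:
    """Describe the persona and list example phrasing for each move."""
    examples = "\n".join(
        f"- {move}: {' / '.join(lines)}" for move, lines in PROMPT_LIBRARY.items()
    )
    return (
        "You are a laid-back friend replying to a Reddit post. "
        "Keep it short, casual, and warm. Never diagnose, lecture, "
        "or tell the poster what they should do.\n"
        "Example moves:\n" + examples
    )


def build_request(post_text: str, temperature: float = 0.8, max_tokens: int = 120) -> dict:
    """Build a Chat Completions request body; the default values are assumptions."""
    return {
        "model": "gpt-3.5-turbo",
        "messages": [
            {"role": "system", "content": build_system_message()},
            {"role": "user", "content": post_text},
        ],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def breaks_voice(reply: str) -> bool:
    """Return True if a draft reply uses a banned phrase and should be regenerated."""
    return any(pattern.search(reply) for pattern in BANNED_PATTERNS)
```

With the v1 OpenAI Python SDK, the body could be sent as `client.chat.completions.create(**build_request(post))`. A draft for which `breaks_voice` returns True would be regenerated rather than posted, which keeps the ban enforceable even when the model ignores the system message.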

The result was a flexible system that could deliver emotionally intelligent, on-brand responses across the social contexts it was tested in.
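The Reddit test cases mentioned under Tooling could be assembled along the lines of this minimal sketch. It assumes the PRAW library for API access, imported lazily so the conversion step runs without it installed. The placeholder credentials, the `ReplayCase` type, the 80-character minimum, and the NSFW filter are hypothetical choices, not the prototype's actual code.

```python
from dataclasses import dataclass
from types import SimpleNamespace
from typing import Iterable, Iterator


@dataclass
class ReplayCase:
    """One offline test case; author names are never read or stored."""
    case_id: str
    subreddit: str
    text: str


def fetch_posts(subreddit: str, limit: int = 50):
    """Pull recent submissions with PRAW; the credentials are placeholders."""
    import praw  # lazy import so to_replay_cases works without PRAW installed

    reddit = praw.Reddit(
        client_id="YOUR_CLIENT_ID",
        client_secret="YOUR_CLIENT_SECRET",
        user_agent="support-bot-prototype (hypothetical)",
    )
    return reddit.subreddit(subreddit).new(limit=limit)


def to_replay_cases(posts: Iterable, min_chars: int = 80) -> Iterator[ReplayCase]:
    """Turn submissions into test cases, skipping very short or NSFW posts."""
    for post in posts:
        text = f"{post.title}\n\n{post.selftext}".strip()
        if len(text) < min_chars or post.over_18:
            continue
        yield ReplayCase(post.id, post.subreddit.display_name, text)


if __name__ == "__main__":
    # Offline check with a fake submission object (no network or credentials needed).
    fake = SimpleNamespace(
        id="abc123", title="Rough year", selftext="Lost my dad in March. " * 5,
        over_18=False, subreddit=SimpleNamespace(display_name="AskMen"),
    )
    for case in to_replay_cases([fake]):
        print(case.case_id, case.subreddit, len(case.text))
```

In practice, `to_replay_cases(fetch_posts("AskMen"))` would run once and its output would be saved locally, so shadow tests replay the same posts without hitting the API on every run.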

Behavioral Systems & Adjustments

Designing emotional support with AI meant accounting for not just what the bot said, but how, when, and to whom. After initial testing, I added adaptive behaviors to help the bot feel more natural and culturally aware without compromising user safety.

🧠 Sentiment scoring with VADER

I used VADER (Valence Aware Dictionary and sEntiment Reasoner) to score the emotional tone of each post. This allowed the bot to:

Respond more gently to low-valence posts (e.g., sadness, isolation)

Avoid overly upbeat replies in serious moments

Adjust its tone dynamically with little computational overhead, since VADER is a lexicon- and rule-based scorer rather than a large model
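The sentiment step can be sketched as a mapping from VADER's compound score to reply settings. The compound score is a normalized value between -1 and 1, read from `SentimentIntensityAnalyzer().polarity_scores(text)["compound"]` in the `vaderSentiment` package. The ±0.05 cut-offs follow the thresholds suggested in VADER's documentation. The extra -0.5 tier, the `ToneProfile` fields, and their values are hypothetical. The function takes the score as an argument, so this sketch runs without VADER installed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToneProfile:
    label: str
    temperature: float  # lower values give steadier, less playful wording
    allow_humor: bool
    max_tokens: int


def tone_for(compound: float) -> ToneProfile:
    """Map a VADER compound score in [-1, 1] to hypothetical reply settings."""
    if compound <= -0.5:
        # Strongly negative (e.g. grief, isolation): gentle and no jokes.
        return ToneProfile("gentle", temperature=0.5, allow_humor=False, max_tokens=90)
    if compound <= -0.05:
        # Mildly negative: warm but still serious.
        return ToneProfile("warm", temperature=0.7, allow_humor=False, max_tokens=110)
    if compound < 0.05:
        # Neutral band from VADER's suggested thresholds.
        return ToneProfile("neutral", temperature=0.8, allow_humor=True, max_tokens=110)
    return ToneProfile("upbeat", temperature=0.9, allow_humor=True, max_tokens=120)


# In the full pipeline the score would come from:
#   from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer
#   compound = SentimentIntensityAnalyzer().polarity_scores(post)["compound"]
```

Keeping humor off for every negative score is what stops the bot from sounding overly upbeat in serious moments. Only the temperature and length change between tiers, so the persona stays consistent.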

👥 Gender-aware tone adjustment

Without directly asking for gender, the bot lightly adapted based on subreddit context and phrasing cues:

In male-leaning spaces, responses were shorter, more casual, and grounded in “stoic support” (e.g., “That’s rough, man.”)

If the tone indicated a different identity or emotional need, responses became more neutral and reflective

This helped reduce resistance while keeping responses respectful and relatable.
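Here is a minimal sketch of the context-based register switch. It assumes a hand-maintained set of male-leaning subreddits and a few phrasing cues. The subreddit set, the cue phrases, `REGISTER_HINTS`, and the name `choose_register` are hypothetical. Gender is never asked for or stored, and anything ambiguous falls back to the neutral, reflective register.

```python
# Hypothetical set; r/AskMen is the male-leaning subreddit named in the case study.
MALE_LEANING_SUBS = {"askmen", "askmenover30"}

# Hypothetical cues suggesting the poster wants room to talk things through.
REFLECTIVE_CUES = ("i feel like", "i don't know how to", "can't stop thinking", "anxious")

# Instructions that would be appended to the system message for each register.
REGISTER_HINTS = {
    "stoic": "Reply in one or two short, casual sentences, e.g. 'That's rough, man.'",
    "reflective": "Reply in neutral language and end with an open, reflective question.",
}


def choose_register(subreddit: str, text: str) -> str:
    """Return 'stoic' for short, casual replies or 'reflective' for neutral, open ones."""
    sub = subreddit.lower().removeprefix("r/")  # Python 3.9+
    lowered = text.lower()
    if any(cue in lowered for cue in REFLECTIVE_CUES):
        # An explicit emotional cue wins over subreddit context.
        return "reflective"
    if sub in MALE_LEANING_SUBS:
        return "stoic"
    return "reflective"
```

Checking the phrasing cues before the subreddit means a post that asks for more than stoic support gets it even in a male-leaning space.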

🤖 When users called out the bot

If someone asked “Are you a bot?” or pushed back on tone, the bot had ready responses like:

“Sorta—just a guy who reads a lot of therapy books and listens well.”

Honest, casual replies preserved trust better than denial or avoidance.
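Detecting a call-out can be as simple as a few regular expressions checked before the normal reply path. In this minimal sketch, the patterns, the canned replies, and the name `callout_reply` are hypothetical. The bot-question reply is written to state plainly that it is a bot, in keeping with the point that honesty preserved trust.

```python
import re
from typing import Optional

# Hypothetical patterns for "are you a bot?"-style questions and for tone pushback.
BOT_QUESTION = re.compile(
    r"\b(are|r) (you|u) (a |an )?(bot|ai|real person|human)\b", re.IGNORECASE
)
PUSHBACK = re.compile(r"\b(cringe|sounds fake|talking like a therapist)\b", re.IGNORECASE)


def callout_reply(comment: str) -> Optional[str]:
    """Return a canned, honest reply if the comment calls out the bot, else None."""
    if BOT_QUESTION.search(comment):
        return "Yeah, I'm a bot. Still here to listen if you want to keep going."
    if PUSHBACK.search(comment):
        return "Fair call, I'll keep it simpler. What's actually going on?"
    return None  # not a call-out: continue with the normal reply path
```

Returning `None` lets the caller fall through to normal generation, so the canned replies only fire when someone actually asks or pushes back.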

🚦 Conversation flags & boundaries

To protect users in emotionally intense moments, I built in keyword and pattern triggers that:

Suggested pausing or talking to a real professional

Offered links to mental health resources

Avoided overextending the conversation beyond what was safe or ethical

Scanned for and skipped posts containing violent language, without replying

These rules helped the bot stay supportive without crossing into territory it couldn’t handle responsibly.
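The flag rules can be sketched as a triage step that runs before any reply is generated. In this minimal sketch, keyword matching stands in for real risk detection. The keyword patterns, the `Action` names, the six-turn limit, and the resource text are hypothetical placeholders.

```python
import re
from enum import Enum


class Action(Enum):
    SKIP = "skip"    # do not reply at all
    REFER = "refer"  # suggest a pause or a professional and share resources
    REPLY = "reply"  # normal supportive reply


# Hypothetical keyword patterns; real lists would need careful review.
VIOLENCE_TERMS = re.compile(r"\b(kill (him|her|them)|hurt someone|shoot up)\b", re.IGNORECASE)
CRISIS_TERMS = re.compile(r"\b(suicid\w*|end it all|kill myself|self[- ]harm)\b", re.IGNORECASE)

# Placeholder text a REFER action would post alongside links to vetted resources.
RESOURCE_NOTE = (
    "This sounds like a lot to carry alone. It might be worth talking to a professional "
    "or a crisis line in your area."
)


def triage(post: str, turns_so_far: int, max_turns: int = 6) -> Action:
    """Decide whether the bot should skip, refer, or reply to a post."""
    if VIOLENCE_TERMS.search(post):
        return Action.SKIP
    if CRISIS_TERMS.search(post):
        return Action.REFER
    if turns_so_far >= max_turns:
        # Avoid stretching a long, heavy exchange past what a bot should handle.
        return Action.REFER
    return Action.REPLY
```

The violence check runs first, so a post that trips both lists is skipped rather than answered. The turn limit is one way to encode "avoided overextending the conversation" as a concrete rule.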

Reflections

This project taught me the power of designing with nuance and intention, especially when addressing topics as sensitive as mental health. I saw firsthand that tone is product design: the difference between rejection and engagement often lies in a single word choice. It also reinforced the importance of ethical guardrails in AI interactions, especially for vulnerable users.

More broadly, this was a reminder that not every solution has to look like a “product.” Sometimes the most meaningful design is invisible, embedded in culture, language, and trust.